The Poverty of Longtermism

Why longtermism isn't an idea that could save one hundred billion trillion lives (Part 4/4)

In my view, the key point is that climate change and AI safety differ in degree but not in kind.

- Max Daniel, in response to A Case Against Strong Longtermism


In 1957, Karl Popper proved it is impossible to predict the future of humanity, but scholars at the Future of Humanity Institute insist on trying anyway. And in so doing, they have created a philosophy called longtermism, which purports to be “an idea that could save 100 billion trillion lives” and one which claims that even a single second’s delay results in “10 000 000 000 human lives lost”. I believe, however, that longtermism is both mistaken and dangerous, and in the concluding post of this series of critiques of longtermism, I will focus on Sir Popper’s proof, and discuss its relevance to the subject at hand.

If you recall, in A Case Against Strong Longtermism, I referenced the preface of The Poverty of Historicism, where Popper establishes the impossibility of predicting the long-term future of humanity. (What is meant by “historicism” will be clarified below, but for now think of it simply as “a desire to predict the future.”)

He writes:

I have shown that, for strictly logical reasons, it is impossible for us to predict the future course of history… I propose to give here, in a few words, an outline of this refutation of historicism. The argument may be summed up in five statements, as follows:

- The course of human history is strongly influenced by the growth of human knowledge. (The truth of this premise must be admitted even by those who see in our ideas, including our scientific ideas, merely the by-products of material developments of some kind or other.)

- We cannot predict, by rational or scientific methods, the future growth of our scientific knowledge. (This assertion can be logically proved, by considerations which are sketched below.)

- We cannot, therefore, predict the future course of human history.

- This means that we must reject the possibility of a theoretical history; that is to say, of a historical social science that would correspond to theoretical physics. There can be no scientific theory of historical development serving as a basis for historical prediction.

- The fundamental aim of historicist methods (see sections 11 to 16 of this book) is therefore misconceived; and historicism collapses.

The argument does not, of course, refute the possibility of every kind of social prediction; on the contrary, it is perfectly compatible with the possibility of testing social theories - for example, economic theories - by way of predicting that certain developments will take place under certain conditions. It only refutes the possibility of predicting historical developments to the extent to which they may be influenced by the growth of our knowledge.

The decisive step in this argument is statement (2). I think that it is convincing in itself: if there is such a thing as growing human knowledge, then we cannot anticipate today what we shall know only tomorrow.¹

- The Poverty of Historicism, p.9 (emphasis added)

The proof itself is contained within The Open Universe: An Argument for Indeterminism (see informal write-up here), where credit for the proof technique is attributed to “personal communication received about 1950 from the late Dr A. M. Turing.”

As it turns out, people on the EA Forum didn’t like Popper’s proof all that much. I will take as a representative selection the comments from Max Daniel, scholar at the Future of Humanity Institute (FHI) and progenitor of the Jon Hamm S-Risk Scenario, who raised a number of interesting objections to Popper’s claim. I will summarize his comments here, but make sure to read his original posts in full (one, two, three).

~

Claim: The future course of human history is influenced by the growth of human knowledge. But we cannot predict the growth of human knowledge. Therefore we cannot predict the future course of human history.

Response: The fields of astronomy and climatology have established that at least some form of long-run prediction is possible. So the question is whether longtermism is viable with the sort of predictions we can currently make, or if it requires the kind of predictions that aren’t feasible. Why can’t longtermists also “predict that certain developments will take place under certain conditions?” [one]

Popper’s proof may be true for a certain technical definition of “predicting”, but it can’t be true in the everyday sense, which is arguably the one relevant to decision making. Consider that social, economic, and technological development are paradigmatic examples of things that would be “impossible to predict” in Popper’s sense. Does it follow that we should do nothing because it’s “impossible to predict the future”? I find it hard to see why there would be a qualitative difference between longtermism and climate change which implies that the former is infeasible while the latter is worthwhile. [two]

The key point is that climate change mitigation and AI safety differ in degree, not in kind. That is to say, while predicting the arrival of misaligned transformative AI may be more challenging than predicting global climate in the year 2100, it’s not a different ‘kind’ of problem, just more difficult. [three]

But in both cases we can make use of laws and trends. In the case of climate change, we can extrapolate current sociological trends forward in time, making statements like “if current data is projected forward with no notable intervention, the Earth would be uninhabitable in x years.” And similarly, in the case of AI alignment, we can say things like “if Moore’s Law continued, we could buy a brain-equivalent of compute for X dollars in Y years.” [three]

And whether we like it or not, we have to quantify our uncertainty. We have to confront the “less well-defined” and “more uncertain” aspects of our future, because these less well-defined issues will make a massive difference to how some harm affects different populations. After all, it’s not that much use if I can predict how much warming we’d get by 2100 if a misaligned AI system kills everyone in 2050. [three]

On the other hand, planning based only on “well-defined” or “predictable” short-term predictions has a pretty terrible track record, as the cases of The Population Bomb and The Limits to Growth demonstrate. In expected value terms, the utility of mitigating an existential threat compensates for the highly uncertain nature of long-term forecasts. Short-term forecasts, however, have no such guarantee. Thus, if we are interested in doing the most good possible, long-term forecasts remain, according to longtermism, not only the most fascinating, but actually the only forecasts worth attempting. [three]

To summarize: If it is possible for climatologists to predict climate change, why is it not possible for longtermists to predict the superintelligence revolution?

Overview

In order to respond to Max, it is worth disentangling two distinct threads of his argument. Defenses of longtermism tend to come in two flavors:

- Pro-science defenses of longtermism are optimistic, and in favor of the scientific method, arguing that predicting human history is no different than any other “kind” of scientific prediction, except perhaps more difficult.

- Anti-science defenses of longtermism are pessimistic, and instead tend to denigrate the scientific method, highlighting its failures while emphasizing that short-term prediction is just as error-prone as long-term prediction, and often more so.

The distinction between the pro-scientific and anti-scientific case for longtermism is important to keep in mind, because often proponents of longtermism will make both cases simultaneously.

So on one hand, for instance, we’re told that science is able to do wonderful things, like bringing about a technological singularity or forecasting the global climate eighty years from now (pro-science). On the other hand, however, we’re told that we’re utterly clueless when making even the most basic short-term predictions, and examples like The Population Bomb or Limits To Growth clearly demonstrate that planning for the future has a terrible track record (anti-science).

The various threads of his argument can be summarized with the help of a little table:

| pro-science | anti-science |
|---|---|
| We can predict climate change, thus we can predict super-intelligence (“degrees not kind” argument) | We’re clueless about everything except longtermism (“cluelessness” argument) |
| We can use laws and trends to make long-term predictions (“laws/trends” argument) | We have a terrible track record of planning based on short-term predictions (“we have no choice” argument) |

In Trend Is Not Destiny, I respond to the pro-science arguments, addressing the examples of The Population Bomb and The Limits to Growth, and show that climate science and AI safety research are indeed different ‘kinds’ of problems, not simply a matter of degree.

In Not-so-complex cluelessness, I respond to the anti-science arguments by critiquing Hilary Greaves’ idea of “complex cluelessness”, which underlies many of the anti-science arguments for longtermism, and has been used to attack empirical, data-driven charities like GiveWell.

But before responding to the forum, it is worth stepping back for a moment and reminding ourselves about just what exactly historicism is, and why the Viennese philosopher dedicated his life to fighting against it. Because, lest we forget, the event which instigated the publication of Poverty was the Anschluss, Germany’s 1938 annexation of Popper’s country of birth.

The Lesson of the 20th Century

In memory of the countless men, women and children of all creeds or nations or races who fell victims to the fascist and communist belief in Inexorable Laws of Historical Destiny.

- The Poverty of Historicism, dedication

“What I mean by ‘historicism’” Popper writes, “is an approach to the social sciences which assumes that historical prediction is their principal aim, and which assumes that this aim is attainable by discovering the ‘rhythms’ or the ‘patterns’, the ‘laws’ or the ‘trends’ that underlie the evolution of history”.

Earlier, I said historicism could be thought of as “a desire to predict the future”, but from Popper’s definition above, we see there’s a little more to it than that. First, historicism isn’t about predicting the future of just anything, it’s about predicting the future of something very specific: the future of humanity. This is what Popper means by the oxymoronic phrase historical prediction - long-term, large-scale, civilization-level predictions, like the apocryphal Mayan prediction about civilization ending in the year 2012. This is also what Popper proved to be impossible.

The second major component of historicism is its relationship to the sciences. Unlike mystics, new-agers and supernaturalists, historicists base their predictions on a close study of history, using tools from the physical sciences to uncover the “‘rhythms’ or the ‘patterns’, the ‘laws’ or the ‘trends’ that underlie the evolution of history.” That is, historicists believe their predictions are based on science, and this is what gives them so much confidence.

A prominent example of historicism is Scientific Socialism (also known as historical materialism), which is “a method for understanding and predicting social, economic and material phenomena by examining their historical trends through the use of the scientific method in order to derive probable outcomes and probable future developments”. According to Scientific Socialism, history is heading towards a “higher stage of communism”, a Utopia in which nationality, sexism, families, alienation, social classes, money, property, commodities, the bourgeoisie, the proletariat, division of labor, cities and countryside, class struggle, religion, ideology, and markets have all been abolished and (note the jargon):

after the enslaving subordination of the individual to the division of labor, and therewith also the antithesis between mental and physical labor, has vanished; after labor has become not only a means of life but life’s prime want; after the productive forces have also increased with the all-around development of the individual, and all the springs of co-operative wealth flow more abundantly—only then can the narrow horizon of bourgeois right be crossed in its entirety and society inscribe on its banners: From each according to his ability, to each according to his needs!

Historicism also has a tendency of spurring people into action. In an interview with Giancarlo Bosetti, Popper explains why:

Giancarlo Bosetti: In your autobiography you describe how you eventually “grasped the heart of the Marxist argument”. “It consists”, you wrote, “of a historical prophecy, combined with an implicit appeal to the following moral law: Help to bring about the inevitable!”. Can you say a little more about this idea of a ‘trap’?

K.R.P: Communist doctrine, which promises the coming of a better world, claims to be based on knowledge of the laws of history. So it was obviously the duty of everybody, especially of somebody like me who hated war and violence, to support the party which would bring about, or help to bring about, the state of affairs that must come in any case. If you knew this yet resisted it, you were a criminal. For you resisted something that had to come, and by your resistance you made yourself responsible or co-responsible for all the terrible violence and all the deaths which would happen when communism inevitably established itself. It had to come; it had to establish itself. And we had to hope there would be the minimum resistance, with as few people sacrificed as possible.

So everyone who had understood that the inevitability of socialism could be scientifically proved had a duty to do everything to help it come about. …It was clear that people, even leaders, must make mistakes - but that was a small matter. The main point was that the Communists were fighting for what had to come in the end. That was what I meant by speaking of a trap, and I was myself for a time caught in the trap.

- The Lesson of this Century, p.17

The danger of the historicist trap - that is, the moral injunction to work on nothing but bringing about that which is destined to come in any case - is that the claim to know the future empties the present of moral responsibility, giving us explicit and direct permission to ignore present-day suffering. Why worry about trivialities like fighting disease, poverty, war, and violence, when we know (in expectation, of course) that some day a super-intelligence will arrive and solve all these problems for us? What could be more important than bringing about this inevitability? What could be more important than bringing about this inevitability safely?

And if you too know this, and yet resist it, you too are a moral criminal. For you resist something that has to come, that has to establish itself, and by your resistance you make yourself responsible, or co-responsible, for all the terrible violence and all the deaths which will happen when mankind delays colonizing our local supercluster. One hundred billion trillion deaths, to be exact, at a rate of ten billion human deaths per second.

~

Safely bringing about the inevitable “final stage” is the driving force for the historicist, who believes they have an urgent moral obligation to drop everything and help ‘lessen the birth pangs’ as they usher humanity towards Utopia.

According to Toby Ord, author and philosopher at Oxford’s Future of Humanity Institute, the Utopia is known as The Long Reflection. We enter The Long Reflection only once we have “achieved existential security”; only then can we begin to “reflect on what we truly desire”. In The Precipice: Existential Risk and the Future of Humanity, Ord writes:

If we achieve existential security, we will have room to breathe. With humanity’s longterm potential secured, we will be past the Precipice, free to contemplate the range of futures that lie open before us. And we will be able to take our time to reflect upon what we truly desire; upon which of these visions for humanity would be the best realization of our potential. We shall call this the Long Reflection. … The ultimate aim of the Long Reflection would be to achieve a final answer to the question of which is the best kind of future for humanity.

- The Precipice: Existential Risk and the Future of Humanity, p.185 - 186 (emphasis added)

The moral injunction to drop everything and usher humanity through its “steps” follows shortly after:

We could think of these first two steps of existential security and the Long Reflection as designing a constitution for humanity. Achieving existential security would be like writing the safeguarding of our potential into our constitution. The Long Reflection would then flesh out this constitution, setting the directions and limits in which our future will unfold.

Our ultimate aim, of course, is the final step: fully achieving humanity’s potential. But this can wait upon a serious reflection about which future is best and on how to achieve that future without any fatal missteps. And while it would not hurt to begin such reflection now, it is not the most urgent task. To maximize our chance of success, we need first to get ourselves to safety - to achieve existential security. This is the task of our time. The rest can wait.

- The Precipice: Existential Risk and the Future of Humanity, p.185 - 186 (emphasis added)

The rest can wait. Popper recognized that the historicist trap often appears - or reappears, rather - in many guises. Earlier I noted that arguments for longtermism come in both a pro-science and anti-science flavor. I stole this observation from Popper, who instead used the terminology of “pro-naturalistic” and “anti-naturalistic”, and in Section II: The Pro-Naturalistic Doctrines of Historicism, explained how proponents of historicism will often make both cases simultaneously:

Modern historicists have been greatly impressed by the success of Newtonian theory, and especially by its power of forecasting the position of the planets a long time ahead. The possibility of such long-term forecasts, they claim, is thereby established, showing that the old dreams of prophesying the distant future do not transcend the limits of what may be attained by the human mind. The social sciences must aim just as high. If it is possible for astronomy to predict eclipses, why should it not be possible for sociology² to predict revolutions?

Yet though we ought to aim so high, we should never forget, the historicist will insist, that the social sciences cannot hope, and that they must not try, to attain the precision of astronomical forecasts. An exact scientific calendar of social events, comparable to, say, the Nautical Almanack, has been shown (in sections 5 and 6) to be logically impossible. Even though revolutions may be predicted by the social sciences, no such prediction can be exact; there must be a margin of uncertainty as to its details and as to its timing.

While conceding, and even emphasizing, the deficiencies of sociological predictions with respect to detail and precision, historicists hold that the sweep and the significance of such forecasts might compensate for these drawbacks. … But although social science, in consequence, suffers from vagueness, its qualitative terms at the same time provide it with a certain richness and comprehensiveness of meaning. Examples of such terms are ‘culture clash’, ‘prosperity’, ‘solidarity’, ‘urbanization’, ‘utility’.

Predictions of the kind described, i.e. long-term predictions whose vagueness is balanced by their scope and significance, I propose to call ‘predictions on a large scale’ or ‘large-scale forecasts’. According to historicism, this is the kind of prediction which sociology has to attempt.

On the other hand, it follows from our exposition of the anti-naturalistic doctrines of historicism that short-term predictions in the social sciences must suffer from great disadvantages. Lack of exactness must affect them considerably, for by their very nature they can deal only with details, with the smaller features of social life, since they are confined to brief periods. But a prediction of details which is inexact in its details is pretty useless. Thus, if we are at all interested in social predictions, large-scale forecasts (which are also long-term forecasts) remain, according to historicism, not only the most fascinating but actually the only forecasts worth attempting.

- The Poverty Of Historicism, p.39 (emphasis added)

So what is the response to the historicist? What is the response to Max?

Trend Is Not Destiny

And with this question in mind, we can finally turn to addressing the forum’s response to Popper’s proof.

To the claim that climate prediction and AI prediction simply differ in “degree” not “kind”, I respond: I kindly disagree. The qualitative difference between the two is that the former is based on laws, while the latter is based (merely) on trends. It is worthwhile, therefore, to clearly distinguish between the two, because, as Popper observes, trends and laws are radically different things³.

Let’s first look at laws. Their central property (relevant for our purposes) is that laws are universal, meaning they hold everywhere and always, and can therefore be used as the basis for long-term prediction. Why? Because laws can be safely assumed to hold in the distant future. This is the reason why long-term astronomical predictions are successful - they make use of physical laws (specifically, laws of gravitation) and weak assumptions⁴, which taken together allow us to predict, for example, that Halley’s Comet will return in mid-2061, or that in one million years, Desdemona will collide with Cressida, leaving Uranus with two fewer moons and Othello with nothing to do.

The use of physical laws is what allows for successful climate predictions, which use, for example, the Greenhouse Effect and the Clausius–Clapeyron relation, as well as knowledge about water vapor, carbon dioxide, methane, ozone, cloud formation, and so on. I discuss the climate change example in greater detail in the appendix below.

The key point is that laws grant us predictive power, and this is why the historicist wants so badly to believe in laws of history. For example, longtermists believe in The Law of Inevitable Technological Progress, which says that GPT-3 is one step closer to super-intelligence than GPT-2, and that each and every day, we march ever forward, forward towards The Technological Singularity, which, according to Nick Bostrom, should arrive sometime around 2040 - 2050.

What about trends? The key property of trends (relevant for our purposes) is that unlike laws, trends stop.

The central mistake of historicism is aptly described by a fellow blogger writing on the same subject: Trend Is Not Destiny.

Nothing guarantees that a trend, like the rise in the price of bitcoin or the biennial doubling of the number of transistors on an integrated circuit, will hold absolutely, universally, and unconditionally forever, as a law does. Yet this is exactly what is assumed in statements like “if current data is projected forward with no notable intervention, the Earth would be uninhabitable in x years”, or “if Moore’s ~~Law~~ Trend continued, we could buy a brain-equivalent of compute for X dollars in Y years”.

This is what Jordan Ellenberg calls “false linearity” - the mistake of assuming a trend will continue forever. As Ellenberg notes, false linearity is the cause of headlines like “All Americans will be obese by 2048!” or “Halving your intake of Vitamin B3 doubles your risk of athlete’s foot!”. If your child is one foot tall after their first year and two feet tall after their second, and you forecast they will be fifty feet tall on their fiftieth birthday, that is the fallacy of false linearity.
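The child-height example can be made concrete with a minimal sketch. The function name `linear_forecast` and the two data points are illustrative assumptions taken from the example above, not anything from the cited book; the point is only that a straight line through two observations will happily produce a fifty-foot adult.

```python
def linear_forecast(ages, heights, target_age):
    """Extend a straight line through the first and last observations.

    This is the 'false linearity' move: it assumes the observed
    growth rate holds unchanged at every future age.
    """
    slope = (heights[-1] - heights[0]) / (ages[-1] - ages[0])
    return heights[-1] + slope * (target_age - ages[-1])


# Hypothetical observations from the example: 1 ft at age 1, 2 ft at age 2.
ages = [1, 2]
heights_ft = [1.0, 2.0]

forecast_ft = linear_forecast(ages, heights_ft, target_age=50)
print(f"Forecast height at age 50: {forecast_ft} ft")
```

Nothing in the arithmetic is wrong; the error lies entirely in the unstated assumption that the conditions producing the first two data points persist for the next forty-eight years.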

False linearity seems to be a mistake people frequently make⁵. The most famous example is of course Malthusianism, with its particular focus on population and resource trends. The Population Bomb and The Limits to Growth aren’t examples of why we should avoid “well-defined” or “predictable” short-term predictions, as Max would have us believe - they’re examples of what happens when you treat trends as laws, and extrapolate. From Wikipedia’s article on The Population Bomb:

Economist Julian Simon and medical statistician Hans Rosling pointed out that the failed prediction of 70s famines were based exclusively on the assumption that exponential population growth will continue indefinitely and no technological or social progress will be made.

Or how about trends in resource production and consumption? See if you can spot the error in reasoning drawn by the research team behind The Limits to Growth:

After reviewing their computer simulations, the research team came to the following conclusions:

- Given business as usual, i.e., no changes to historical growth trends, the limits to growth on earth would become evident by 2072, leading to “sudden and uncontrollable decline in both population and industrial capacity”. (…)

- Growth trends existing in 1972 could be altered so that sustainable ecological and economic stability could be achieved.

- The sooner the world’s people start striving for the second outcome above, the better the chance of achieving it.

And indeed, Popper identifies the confusion between laws and trends as the central mistake of historicism:

This, we may say, is the central mistake of historicism. Its “laws of development” turn out to be absolute trends; trends which, like laws, do not depend on initial conditions, and which carry us irresistibly in a certain direction into the future. They are the basis of unconditional prophecies, as opposed to conditional scientific predictions. …

[Imagining his favorite trend will stop] is just what the historicist cannot do. He firmly believes in his favourite trend, and conditions under which it would disappear are to him unthinkable. The poverty of historicism, we might say, is a poverty of imagination. The historicist continuously upbraids those who cannot imagine a change in their little worlds; yet it seems that the historicist is himself deficient in imagination, for he cannot imagine a change in the conditions of change.

- The Poverty Of Historicism, p.105-106 (emphasis added)

So this is what makes long-term predictions in the case of AI safety, and longtermism writ large, a qualitatively different “kind” of problem than long-term predictions in the astronomical or climatological setting. The former are based on trends, which can stop, reverse, or change entirely at any arbitrary point in the future, and the latter are based on laws, which can’t, and don’t.

In other words: Trend is not destiny.

So what you’re saying is …

To anticipate the “So you’re saying you can’t predict anything?” critique, it is probably worth clarifying what I’m not saying. I’m certainly not saying that all predictions about the future are equally useless (see also: Proving Too Much), nor am I denying that there is a significant difference between reasonable predictions (“the sun will not explode tomorrow”) and completely nonsensical predictions (“we will have AGI in the next 50 years.”). My attack, and Popper’s proof, concern a narrow kind of long-term prediction, namely, predictions about the future of the human species.

Another thing I’m not saying is that one can never extrapolate trends - only that one should be careful not to extrapolate trends arbitrarily far into the future. Here’s a simple heuristic: If you read work that contains gargantuan numbers and outlandish predictions, look for limitless trend extrapolation.

As an example, we turn to the recently-updated The Case For Strong Longtermism (Version 2!).

It’s taken me so long to get the final post of this series out that the authors have made significant changes to their original working draft in the meantime, removing the Shivani example and the suggestion that “we can in the first instance often simply ignore all the effects contained in the first 100 (or even 1000) years”.

Perhaps most significantly, the authors have changed their initial assumptions. Previously the authors had assumed “the future will contain at least 1 quadrillion (\(10^{15}\)) beings in expectation”, but in the latest draft, this assumption has been “precisified” in the form of six, count ‘em, future scenarios:

| Scenario | Duration (centuries) | Lives Per Century | Number of Future Lives |
|---|---|---|---|
| Earth (mammalian reference class) | $10^{4}$ | $10^{10}$ | $10^{14}$ |
| Earth (digital life) | $10^{4}$ | $10^{14}$ | $10^{18}$ |
| Solar System | $10^{8}$ | $10^{19}$ | $10^{27}$ |
| Solar System (digital life) | $10^{7}$ | $10^{23}$ | $10^{30}$ |
| Milky Way | $10^{11}$ | $10^{25}$ | $10^{36}$ |
| Milky Way (digital life) | $10^{11}$ | $10^{34}$ | $10^{45}$ |

One is meant to use the equation \(\text{centuries} \times \text{lives per century} = \text{number of future lives}\) and in so doing, one commits the crime of \(\text{false linearity}\). This we are encouraged to view as an exercise in uncertainty quantification, but I just see it as making a mistake six times rather than once⁶. This is not an improvement.
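The arithmetic behind the table can be reproduced in a few lines. This is a minimal sketch rather than the paper’s own method: `SCENARIOS` and `future_lives_exponent` are hypothetical names introduced here, and the exponents are simply copied from the table above.

```python
# Powers of ten copied from the scenario table:
# (duration in centuries, lives per century).
SCENARIOS = {
    "Earth (mammalian reference class)": (4, 10),
    "Earth (digital life)": (4, 14),
    "Solar System": (8, 19),
    "Solar System (digital life)": (7, 23),
    "Milky Way": (11, 25),
    "Milky Way (digital life)": (11, 34),
}


def future_lives_exponent(duration_exp: int, lives_per_century_exp: int) -> int:
    """Return e such that 10**duration_exp * 10**lives_per_century_exp == 10**e.

    Multiplying the two columns treats the per-century figure as
    constant across the entire duration.
    """
    return duration_exp + lives_per_century_exp


totals = {name: future_lives_exponent(*exps) for name, exps in SCENARIOS.items()}
for name, exponent in totals.items():
    print(f"{name}: 10^{exponent} future lives")
```

Each total is the product of two constants, so every row inherits the same assumption: that a single per-century rate holds steady over tens of thousands to hundreds of billions of centuries.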

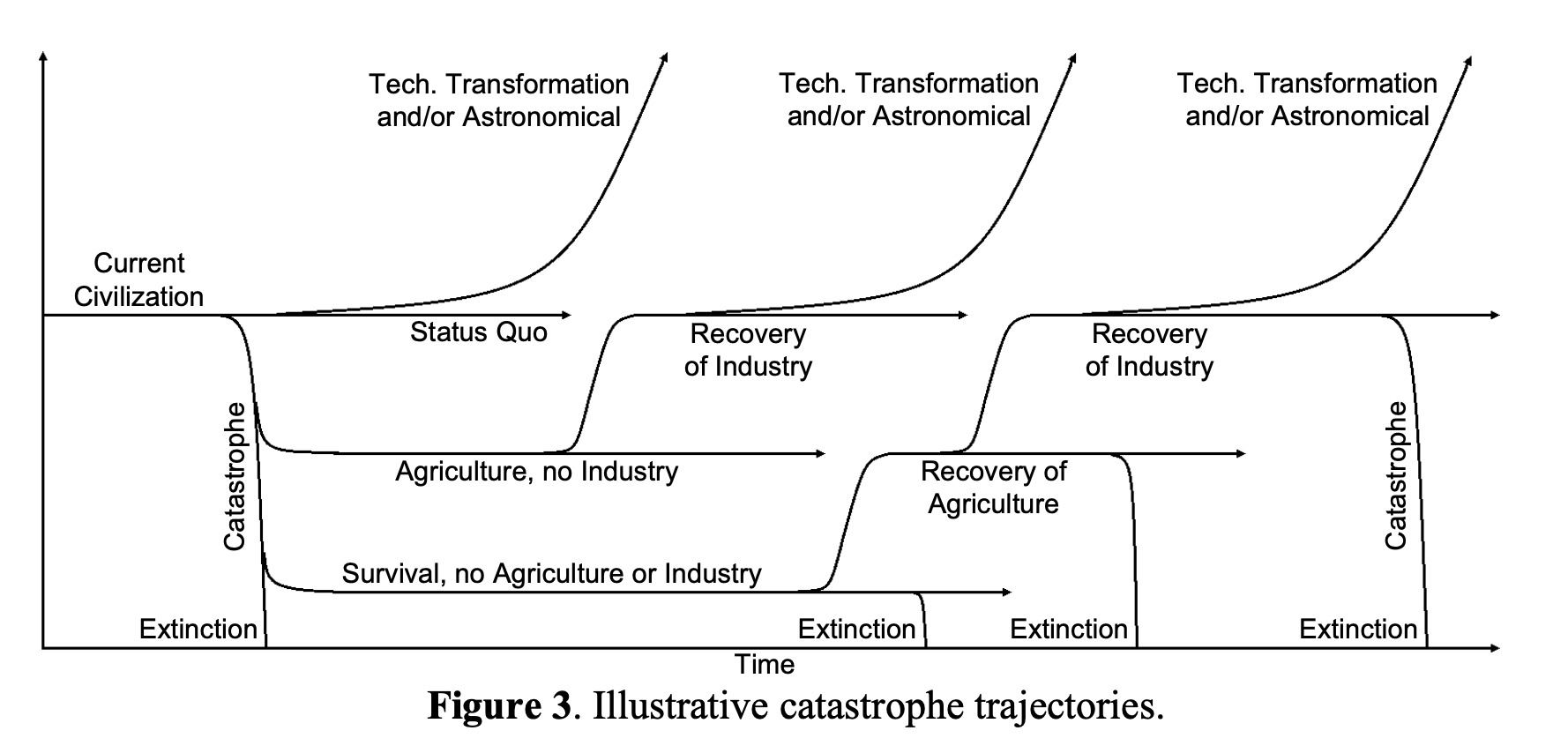

A figure showing “unconditional prophecies” from an FHI paper, “Long-Term Trajectories of Human Civilization”. The y-axis is unlabeled by the authors. (“Note that Figure 1 does not label the vertical axis. Instead, it uses an unspecified aggregate measure of human civilization.”)

Not-so-complex cluelessness

While dedicating your life to working on impossibilities and paradoxes might be a little silly, working to undermine the scientific method itself is something else entirely. This is the case with Hilary Greaves’ work on “complex cluelessness”, which was brought to my attention by Jack Malde, a development economist who is worried by Greaves’ critique and has lost confidence in GiveWell as a result:

On giving too much credit to GiveWell: let me first say I applaud GiveWell for their analysis. They’ve done a great job at estimating short-term effects of their charities. But they really are missing a load of highly relevant and important data on indirect effects / longer-term effects that are likely to be vast, could realistically be negative in sign (think climate change effects, effects on animal welfare etc.), and could feasibly outweigh the short term effects.

Therefore I don’t think we can be confident that giving to GiveWell charities is actually good. This is what Greaves calls complex cluelessness (also see here). I raised this point in a comment on Ben’s post on the EA Forum and didn’t really get an adequate answer … Overall this means I’m not sure going with GiveWell is the answer, and this is coming from someone who is currently working as an empirical economist.

Fortunately, Greaves’ argument is built upon a simple misconception about the role of evidence in science. But before getting to that, it’s first worth summarizing her widely-circulated talk here in full.

~

In the first part, after a perfunctory nod to the importance of evidence and effectiveness, Hilary shares a personal story of being plagued by the “usual old chestnut worries about aid not working: culture of dependency, wastage and so forth” during dinner with a student:

I was so plagued by these worries, by ineffectiveness, that I basically wasn’t donating more than 10 or 20 pounds a month at that point. And it was when my student turned round to me and said, basically: GiveWell exists; there are people who have paid serious attention to the evidence, thought it all through, written up their research; you can be pretty confident of what works actually, if you just read this website. That, for me, was the turning point. That was where I started feeling “OK, I now feel sufficiently confident that I’m willing to sacrifice 10 percent of my salary or whatever it may be”.

Her confidence didn’t last long, however, and the worries quickly returned. Her central concern is this: If we want to know what actually works, we need to pay close attention to “the evidence”. But “evidence” can only take us so far. Sure, we can look at “the data” that tells us how many deaths are averted per dollar spent on bed net distribution, but no amount of “evidence” can account for the knock-on effects and side effects of a given action between now and forever. Sure, educating a child might seem like a good thing to do, but what if the child grows up to be Hitler?

So you have some intervention, (say) whose intended effect is an increase in child years spent in school. Increasing child years spent in school itself has downstream further consequences not included in the basic calculation. It has downstream consequences, for example, on future economic prosperity. Perhaps it has downstream consequences on the future political setup in the country. (emphasis added)

No amount of so-called “cost-effectiveness analyses” could ever account for these unmeasurable down-stream ripple effects, about which she observes three key things:

- The unmeasured effects are greater in aggregate than the measured effects.

- These further future events are much harder to estimate.

- These matter just as much as the near-term effects.

What do we get when we put all three observations together? More worry:

What do we get when we put all those three observations together? Well, what I get is a deep seated worry about the extent to which it really makes sense to be guided by cost-effectiveness analyses of the kinds that are provided by meta-charities like GiveWell. If what we have is a cost-effectiveness analysis that focuses on a tiny part of the thing we care about, and if we basically know that the real calculation - the one we actually care about - is going to be swamped by this further future stuff that hasn’t been included in the cost-effectiveness analysis; how confident should we be really that the cost-effectiveness analysis we’ve got is any decent guide at all to how we should be spending our money? (emphasis added)

As a friend observed, this is like saying “we know that feeding this child right now will prevent him from starving, but his great great great great great grandchild might end up enslaving us in a white egg-shaped gadget, so let’s not do it”.

This she calls “complex cluelessness”, but I just call it “not being able to see into the future.”

~

In the last part of the talk, we’re treated to five possible responses to complex cluelessness:

- Make the cost-effectiveness analysis a little bit more sophisticated. Conclusion: Doesn’t work.

- Give up effective altruism. Conclusion: Definitely doesn’t work.

- Do a very bold analysis. Conclusion: Nope, doesn’t work.

- Ignore things we can’t estimate. Conclusion: Very tempting, but no.

- Go longtermist. Conclusion: Yahtzee.

That last one is the one Greaves is “probably most sympathetic to”, and in response five, Greaves tantalizingly foreshadows that she has an answer to how longtermism gets us out of the complex cluelessness conundrum:

Considerations of cluelessness are often taken to be an objection to longtermism because, of course, it’s very hard to know what’s going to beneficially influence the course of the very far future on timescales of centuries and millennia. … However, what my own journey through thinking about cluelessness has convinced me, tentatively, is that that’s precisely the wrong conclusion. And in fact, considerations of cluelessness favour longtermism rather than undermining it. (emphasis added)

The reader leans forward in eager anticipation. The argument?

Why would that be? Well … the majority of the value of funding things like bed net distribution comes from their further future effects. However, in the case of [funding bed nets], we find ourselves really clueless about … the value of those further future effects.

…(W)hat kinds of interventions might have [the property where we have some clue about the effects of our actions until the end of time?] … (T)ypically things [that longtermists fund]. … (T)he thought being that if you can reduce the probability of premature human extinction, even by a tiny little bit, then in expected value terms, given the potential size of the future of humanity, that’s going to be enormously valuable.

(Heavily edited for clarity. See original text here, Response Five, paragraphs 4 - 6.)

What we get, disappointingly, is a circularity - longtermism gets us out of cluelessness because longtermists are convinced it does. (“And if we want to know what kinds of interventions might have that property, we just need to look at what people, in fact, do fund in the effective altruist community when they’re convinced that longtermism is the best cause area. They’re typically things like reducing the chance of premature human extinction…”)

After scouring her formal paper and talk on this subject, I have yet to find a non-circular argument for this crucial step (but invite readers to point me in the right direction in the comments below).

For an optimistic alternative to the self-induced moral paralysis which is “complex cluelessness”, I refer the reader to Ben Chugg’s excellent piece: Moral Cluelessness Is Not An Option.

The role of data and evidence in science

Earlier, I said that Greaves’ argument is built upon a simple misconception about the role of evidence in the sciences. This can be seen in the first part of her talk, where she speaks on the importance of evidence:

The world is a complicated place. It’s very hard to know a priori which interventions are going to cause which outcomes. We don’t know all the factors that are in play, particularly if we’re going in as foreigners to try and intervene in what’s going on in a different country.

And so if you want to know what actually works, you have to pay a close attention to the evidence. Ideally, perhaps randomised controlled trials. This is analogous to a revolution that’s taken place in medicine, for great benefit of the world over the past 50 years or so, where we replace a paradigm where treatments used to be decided mostly on the basis of the experience and intuition of the individual medical practitioner. We’ve moved away from that model and we’ve moved much more towards evidence-based medicine, where treatment decisions are backed up by careful attention to randomised controlled trials.

Much more recently, in the past ten or fifteen years or so, we’ve seen an analogous revolution in the altruistic enterprise spearheaded by such organisations as GiveWell, which pay close attention to randomised controlled trials to establish what works in the field of altruistic endeavour. (emphasis added)

I have highlighted the mistake in bold. “Evidence” isn’t some magic substance that can tell us what will “actually work” between now and eternity - it can only tell us what doesn’t work. In other words, no amount of evidence can let us see into the future, but that’s nothing to worry about, because that’s not what we use evidence for anyway.

So what are we actually learning when we run RCTs? Let’s say you develop a new ginger candy to cure headaches. You give it to a few headachy friends, who then report feeling better. Can we say the ginger candy is an effective treatment for headaches? Well, no - placebo effect, duh.

So to account for this, you might instead get forty thousand of your closest friends together, and randomly allocate them into two separate groups, controlling for age, gender, height, weight, and so on. To the first group you’ll administer your ginger candy treatment, and to the second, you’ll administer a special placebo candy, which tastes exactly like your ginger candy, but is made with artificial ginger flavoring instead. All respondents diligently report back, and lo and behold, you discover both groups report the same decline in headaches. Bummer, turns out your treatment wasn’t effective after all.

In other words, your theory has been falsified. What you thought was an effective treatment for headaches turned out to be mistaken, but luckily, the RCT caught it.

Now, imagine running the same trial, except with Tylenol® Extra Strength, which provides clinically proven, fast, effective pain relief today. Your forty thousand friends report back, and Hey! this time there is a difference in reported headaches between the treatment group and the control group.

Can we say that the RCT has proven that Tylenol® Extra Strength provides clinically proven, fast, effective pain relief today? No, we cannot. What we have learned from running the RCT is not that Tylenol “actually works”, but rather, that the underlying pharmacological theory relating acetaminophen to pain relief has withstood another round of falsification. The ginger-cures-headaches theory has been falsified, while the acetaminophen-cures-headaches theory has not, and in this way we have made progress.
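For the data scientists in the audience, here is a minimal sketch of the comparison such a trial makes. Everything in it is an illustrative assumption rather than real trial data: the function name `run_trial`, the effect sizes, the noise level, and the sample size (much smaller than forty thousand, so that it runs quickly). The ginger candy is modelled as having no effect beyond placebo, and the acetaminophen as having one. A permutation test then asks how often a random relabelling of the two groups would produce a gap as large as the one observed.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


def run_trial(true_effect, n=1000, rounds=500, seed=0):
    """Simulate a two-arm randomized trial (hypothetical numbers throughout).

    Returns (observed_gap, p_value), where p_value is the share of random
    relabellings whose gap is at least as large as the observed one.
    """
    rng = random.Random(seed)

    # Random allocation: shuffle participant ids, first half gets the treatment.
    ids = list(range(n))
    rng.shuffle(ids)
    treated_ids = set(ids[: n // 2])

    treated, control = [], []
    for person in range(n):
        # Everyone reports some relief: an assumed placebo response plus noise.
        relief = 1.0 + rng.gauss(0, 1)
        if person in treated_ids:
            treated.append(relief + true_effect)
        else:
            control.append(relief)

    observed = mean(treated) - mean(control)

    # Permutation test: if the treatment did nothing, the group labels are
    # arbitrary, so reshuffle them and see how big the gap gets by chance.
    pooled = treated + control
    half = len(treated)
    extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        gap = mean(pooled[:half]) - mean(pooled[half:])
        if abs(gap) >= abs(observed):
            extreme += 1
    return observed, extreme / rounds


if __name__ == "__main__":
    print("ginger candy (assumed no real effect):", run_trial(true_effect=0.0))
    print("acetaminophen (assumed real effect): ", run_trial(true_effect=1.0))
```

Note what the output does and does not say. A small gap counts against the claim that the candy beats placebo; a large gap means the acetaminophen theory survived this particular test. Neither number says anything about consequences beyond the trial itself.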

~

So often our preferred theories turn out not to work, and RCTs are a means by which we check. One example of a theory which doesn’t work is given by Greaves at the beginning of her talk, where she proposes a theory to explain why teenage girls aren’t attending school in poor rural areas:

Maybe their period is what’s keeping many teenage girls away from school. If so, then one might very well think distributing free sanitary products would be a cost-effective way of increasing school attendance. But at least in one study, this too turns out to have zero net effect on the intended outcome. It has zero net effect on child years spent in school. That’s maybe surprising, but that’s what the evidence seems to be telling us. So many well-intentioned interventions turn out not to work.

So, like the ginger-cures-headaches theory, the free-sanitary-products-increase-school-attendance theory was also falsified. But rather than viewing this as an ordinary part of scientific progress, a defeated and worried Greaves throws up her hands and declares herself clueless:

I don’t know whether I’m going to increase or decrease future population. And secondly, even if I did, even if I knew, let’s say for the sake of argument, that I was going to be increasing future population size, I don’t know whether that’s going to be good or bad. There are very complicated questions here. I don’t know what the effect is of increasing population size on economic growth. I don’t know what the effect is on tendencies towards peace and cooperation versus conflict.

And crucially, I don’t know what the effect is of increasing population size on the size of existential risks faced by humanity (that is, chances that something might go really catastrophically wrong, either wiping out the human race entirely, or destroying most of the value in the future of human civilisation).

So, what I can be pretty sure about is that once I’ve thought things through, there will be a non-zero expected value effect in that further future; and that will dominate the calculation. But at the moment, at least, I feel thoroughly clueless about even the sign, never mind the magnitude, of those further future effects.

Nobody knows any of this stuff, because again, we cannot see into the future. Data, evidence, randomized controlled trials - these are not prophetic crystal balls that can tell us the consequences of our actions thousands of years from now - they are a means of falsifying hypotheses, that’s it. And how does longtermism solve this lack-of-knowledge problem again?

Conclusion

It seems to me the first principle of the study of any belief system is that its ideas and terms must be stated in terms other than its own; that they must be projected on to some screen other than one which they themselves provide. They may and must speak, but they must not be judges in their own case. For concepts, like feelings and desires, have their cunning. Only in this way may we hope to lay bare the devices they employ to make their impact – whether or not those devices are, in the end, endorsed as legitimate. (emphasis added)

- Ernest Gellner, The Psychoanalytic Movement: The Cunning of Unreason

In concluding this investigation into Bayesian epistemology and its consequences, it’s worth revisiting a subject I only hinted at briefly in the first post of this series, with my vague allusions to ‘the framework’. If you recall, in A Case Against Strong Longtermism, I made a number of points:

-

Longtermism is a Utopianist “ends justify the means” philosophy. Shockingly, at least one of my critics “bit the bullet” here, acknowledging that

Various historical cases suggest that … attempts to enact grand, long-term visions often have net negative effects… In practice, strong longtermism is more likely to be bad for the near-term than it should be in theory.

- Longtermism encourages people to move their donations away from charities like Helen Keller International and the Malaria Consortium, and towards ever-more-outlandish sci-fi scenarios. This is best exemplified by the order of priorities in EA’s Current List Of Especially Pressing World Problems.

- The reasoning deployed in The Case For Strong Longtermism (TCFSL) could be used to attack any non-longtermist charity whatsoever.

- Academic jargon was being used as a smokescreen, making the ideas hard to understand, and thus hard to criticize.

- Objective and subjective probabilities are (to borrow Popper’s phrase) radically different things, and should not be compared. One comes from data, while the other is entirely made up.

- Related: In TCFSL, as a means of invalidating GiveWell’s empirical findings, numbers were conjured out of thin air and compared against GiveWell’s effectiveness estimates.

- The foundational assumptions in TCFSL were flawed.

- Expected values were being used inappropriately by the authors (that is, without data to inform the probability estimates).

- Karl Popper proved predicting the future of human civilization was impossible.

And with regards to ‘the framework’, I wrote:

And so it’s worth holding in mind the idea that the framework itself might be the problem, when reading defenses of longtermism written by those reluctant to step outside of, or even notice, the framework in which they are imprisoned.

The results are in, and we can now look at some examples of what I meant here. By ‘framework’ I meant Bayesian epistemology, and examples of an unwillingness to step outside of it look like the responses I received to the first post of this series. (For the data scientists in the audience, “Bayesian epistemology” can be thought of as Bayesian statistics, minus the data.)

Most of the responses to the nine bullets above took the form: “Yes, but you don’t understand, we have to use expected values to predict the long-term future, because…”

- If we don’t, it leads to absurdities (see: Proving Too Much)

- If we don’t, we violate the inviolable Laws of Rationality (see: The Credence Assumption)

- If we don’t, we’ll have to throw our hands up in the air and do nothing. Also, climate change. (see: The Poverty of Longtermism)

and I anticipate the response to this piece being something like:

“Longtermism is nothing like historicism! Of course we know we can’t predict the future! Of course we know about the fallacy of false linearity, and the difference between laws and trends! You don’t understand, this time it’s different! Unlike historicists of the past, we phrase our predictions in terms of expected values!”

- future critic, probably

In The Psychoanalytic Movement: The Cunning of Unreason, Ernest Gellner notes that closed belief systems often make use of an intellectual “device”, some idea with a “long, venerable philosophical history”, which has the power to confuse or bewilder those who have not yet learned how to use the device on others themselves. In the case of longtermism, expected values are this device, and this series of posts represents my best attempt at laying this device bare.

Appendix

What’s going on with climate forecasts?

To answer this, we turn to Nate Silver’s excellent book The Signal and The Noise - specifically Chapter 12, A Climate Of Healthy Skepticism. To set the stage, Silver first distinguishes between meteorology, which is about predicting the weather a few days from now, and climatology, which is about predicting long-term patterns in weather over time.

About meteorology, Silver says it is one of the great success stories in the history of prediction, as well as the history of science more generally, having spawned the entire discipline of chaos theory. “Through hard work, and a melding of computer power with human judgment,” says Silver, “weather forecasts have become much better than they were even a decade or two ago.”

Unfortunately the same cannot be said of climatology. To explain, Silver describes what led to advancements in the meteorological setting. He attributes the success to two factors.

- Meteorologists have data, collected daily, which provides a feedback signal that can be used to test the accuracy of various statistical models. However, “this advantage is not available to climate forecasters and is one of the best reasons to be skeptical about their predictions, since they are made at scales that stretch out to as many as eighty or one hundred years in advance.” (my emphasis).

- Meteorologists have knowledge about the physical structure under consideration, which can then be encoded into a computer program and run to create a “simulation of the physical mechanics of some portion of the universe.” These models are more difficult to construct than statistical models, but can be much more accurate, and “statistically driven systems are now used as little more than the baseline to measure these more accurate forecasts against.”

When climatologists have that information available, they can make predictions using similar techniques, and have, for example, successfully forecast the trajectories of hurricanes using knowledge of cloud formation. But in many instances, such as predictions that “are made at scales that stretch out to as many as eighty or one hundred years in advance”, this information is simply not available, basically rendering these predictions meaningless. I refer the reader especially to the subsection titled A Forecaster’s Critique of Global Warming Forecasts for further discussion.

(As an aside: it would sure be nice if these climate models were made open source, so we could all look at how the predictions are made.)

~

Turning next to the IPCC: in The Signal and The Noise, Silver notes that, of the many findings across the IPCC’s reports, only two are classified by IPCC scientists as absolutely certain. Notably, these findings are based on knowledge of physical laws - or, in Silver’s words, “relatively simple science that had been well-understood for more than 150 years and which is rarely debated even by self-described climate skeptics.” He continues,

The IPCC’s first conclusion was simply that the greenhouse effect exists:

There is a natural greenhouse effect that keeps the Earth warmer than it otherwise would be.

The Greenhouse Effect is the process by which certain atmospheric gases — principally water vapor, carbon dioxide, methane, and ozone — absorb solar energy that has been reflected from the earth’s surface. (…)

The IPCC’s second conclusion made an elementary prediction based on the greenhouse effect:

As the concentration of greenhouse gases increased in the atmosphere, the greenhouse effect and global temperatures would increase along with them.

(…)7

- Nate Silver, The Signal and the Noise, p.356-358

Silver notes that the second conclusion is supported by data (rising CO2 levels) and basic high school science (burning fossil fuels produces CO2). In the subsection titled A Forecaster’s Critique of Global Warming Forecasts, Silver shows that long-term climate forecasters lack this information, and provides data showing they are unsuccessful as a result:

(T)he complexity of the global warming problem makes forecasting a fool’s errand. “There’s been no case in history where we’ve had a complex thing with lots of variables and lots of uncertainty, where people have been able to make econometric models or any complex models work,” Armstrong told me. “The more complex you make the model the worse the forecast gets.” …

A survey of climate scientists conducted in 2008 found that almost all (94 percent) were agreed that climate change is occurring now, and 84 percent were persuaded that it was the result of human activity. But there was much less agreement about the accuracy of climate computer models. The scientists held mixed views about the ability of these models to predict global temperatures, and generally skeptical ones about their capacity to model other potential effects of climate change. Just 19 percent, for instance, thought they did a good job of modeling what sea-rise levels will look like fifty years hence.

- Nate Silver, The Signal and the Noise, p.364-366, (emphasis added)

So, to conclude - The strongest of the IPCC findings consist of modest predictions, backed by physical law, supported by observation, and requiring only relatively weak assumptions based on well established scientific principles such as the Greenhouse Effect and the Clausius–Clapeyron relation, as well as known properties of water vapor, carbon dioxide, methane, and ozone. What, pray tell, are the analogous laws governing longtermist predictions?

:-)( pic.twitter.com/upVkkhk3EX

— Moshe Vardi (@vardi) July 21, 2021

Footnotes

-

The reason we cannot predict the growth of human knowledge is nicely explained by Alasdair MacIntyre in After Virtue:

Some time in the Old Stone Age you and I are discussing the future and I predict that within the next ten years someone will invent the wheel. ‘Wheel?’ you ask. ‘What is that?’ I then describe the wheel to you, finding words, doubtless with difficulty, for the very first time to say what a rim, spokes, a hub and perhaps an axle will be. Then I pause, aghast. ‘But no one can be going to invent the wheel, for I have just invented it.’

In other words, the invention of the wheel cannot be predicted. For a necessary part of predicting an invention is to say what a wheel IS; and to say what a wheel IS just IS to invent it. It is easy to see how this example can be generalized. Any invention, any discovery, which consists essentially in the elaboration of a radically new concept cannot be predicted, for a necessary part of the prediction is the present elaboration of the very concept whose discovery or invention was to take place only in the future. The notion of the prediction of radical conceptual innovation is itself conceptually incoherent.

- After Virtue,

-

The word “sociology” now has associations with a particular strand of campus politics, but here Popper simply means “the study of the development, structure, and functioning of human society.” ↩

-

See in particular Chapter 27: Is There a Law of Evolution? Laws and Trends. ↩

-

“Assuming another asteroid doesn’t collide with Halley’s Comet along its route”, “Assuming we’re not mistaken about our current laws of gravitation”, “Assuming space aliens don’t appear and destroy Uranus in the next million years”, “Assuming the sun doesn’t explode” … ↩

-

Indeed, using extrapolated trends as the basis for long-term forecasting has resulted in ‘some of the best-known failures of prediction’ made over the last half century. Every book on forecasting I could find warns of some variation of this error. For example, here’s Bayesian statistician Nate Silver:

The Dangers of Extrapolation

Extrapolation is a very basic method of prediction—usually, much too basic. It simply involves the assumption that the current trend will continue indefinitely, into the future. Some of the best-known failures of prediction have resulted from applying this assumption too liberally.

- Nate Silver, The Signal and The Noise, p. 203

Or here’s Nassim Nicholas Taleb, on the danger of extrapolating trends using linear models (i.e. straight lines):

With a linear model, that is, using a ruler, you can run only a straight line, a single straight line from the past to the future. The linear model is unique. There is one and only one straight line that can project from a series of points. But it can get trickier. If you do not limit yourself to a straight line, you find that there is a huge family of curves that can do the job of connecting the dots. If you project from the past in a linear way, you continue a trend. But possible future deviations from the course of the past are infinite.

- Nassim Nicholas Taleb, The Black Swan, p.188 (emphasis added)

How about Bayesian Superforecaster Philip Tetlock? While Tetlock disagrees with Taleb about the feasibility of short-term predictions, they fully agree that no one, not even super-intelligent super-human super-forecasters, can successfully predict the long-term future of complex systems like geopolitics, economics, and by extension, human civilization writ large:

Taleb, Kahneman, and I agree there is no evidence that geopolitical or economic forecasters can predict anything ten years out beyond the excruciatingly obvious — “there will be conflicts” — and the odd lucky hits that are inevitable whenever lots of forecasters make lots of forecasts. These limits on predictability are the predictable results of the butterfly dynamics of nonlinear systems.

- Philip Tetlock, Superforecasting, p. 240. (emphasis added)

Tetlock goes on to refer to “absurdities like twenty-year geopolitical forecasts and bestsellers about the coming century” (What must he think of The Precipice?) and his first “Commandment for Aspiring Superforecasters” is simple: Don’t attempt to predict the unpredictable.

Focus on questions where your hard work is likely to pay off. Don’t waste time either on easy “clocklike” questions (where simple rules of thumb can get you close to the right answer) or on impenetrable “cloud-like” questions (where even fancy statistical models can’t beat the dart-throwing chimp). Concentrate on questions in the Goldilocks zone of difficulty, where effort pays off the most.

For instance, “Who will win the presidential election, twelve years out, in 2028?” is impossible to forecast now. Don’t even try. Could you have predicted in 1940 the winner of the election, twelve years out, in 1952? If you think you could have known it would be a then-unknown colonel in the United States Army, Dwight Eisenhower, you may be afflicted by one of the worst cases of hindsight bias ever documented by psychologists.

- Philip Tetlock, Superforecasting, p. 271. (emphasis added)

-

And additionally, having made a mistake, the appropriate response is to rectify it, not adjust one’s “credences” to compensate, as the authors proceed to do in the subsequent paragraphs. ↩

-

I abridged this quote significantly. The full text reads:

The IPCC’s second conclusion made an elementary prediction based on the greenhouse effect: as the concentration of greenhouse gases increased in the atmosphere, the greenhouse effect and global temperatures would increase along with them:

Emissions resulting from human activities are substantially increasing the atmospheric concentrations of the greenhouse gases carbon dioxide, methane, chlorofluorocarbons (CFCs) and nitrous oxide. These increases will enhance the greenhouse effect, resulting on average in additional warming of the Earth’s surface. The main greenhouse gas, water vapor, will increase in response to global warming and further enhance it.

This IPCC finding makes several different assertions, each of which is worth considering in turn.

- First, it claims that atmospheric concentrations of greenhouse gases like CO2 are increasing, and as a result of human activity. This is a matter of simple observation. Many industrial processes, particularly the use of fossil fuels, produce CO2 as a by-product. Because CO2 remains in the atmosphere for a long time, its concentrations have been rising: from about 315 parts per million (ppm) when CO2 levels were first directly monitored at the Mauna Loa Observatory in Hawaii in 1959 to about 390 ppm as of 2011.

- The second claim, “these increases will enhance the greenhouse effect, resulting on average in additional warming of the Earth’s surface,” is essentially just a restatement of the IPCC’s first conclusion that the greenhouse effect exists, phrased in the form of a prediction. The prediction relies on relatively simple chemical reactions that were identified in laboratory experiments many years ago. The greenhouse effect was first proposed by the French physicist Joseph Fourier in 1824 and is usually regarded as having been proved by the Irish physicist John Tyndall in 1859, the same year that Charles Darwin published On the Origin of Species.

- The third claim—that water vapor will also increase along with gases like CO2, thereby enhancing the greenhouse effect—is modestly bolder. Water vapor, not CO2, is the largest contributor to the greenhouse effect. If there were an increase in CO2 alone, there would still be some warming, but not as much as has been observed to date or as much as scientists predict going forward. But a basic thermodynamic principle known as the Clausius–Clapeyron relation, which was proposed and proved in the nineteenth century, holds that the atmosphere can retain more water vapor at warmer temperatures. Thus, as CO2 and other long-lived greenhouse gases increase in concentration and warm the atmosphere, the amount of water vapor will increase as well, multiplying the effects of CO2 and enhancing warming.

- Nate Silver, The Signal and the Noise, p. 356 - 358